Team members

- Dr. Juan A. Carretero

Determining the minimum distance between two objects is a problem that has been solved using many different approaches. Most methods proposed so far are, in essence, limited to solving the problem between convex polyhedra. To deal with concave objects, these methods partition each concave object into convex sub-objects and solve the convex problem for every possible combination of sub-objects. This adds a large computational expense, especially when the concave objects in the scene are complicated or when concave, quadratically bounded objects must be linearized.

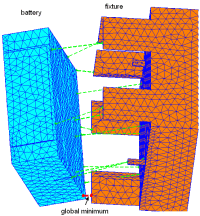

In this work, two optimization-based formulations are proposed to solve the minimum distance problem without partitioning concave objects into convex sub-objects. The first, referred to as the continuous approach, uses concepts from constructive solid geometry to represent objects with concavities. The second, referred to as the combinatorial approach, replaces the geometry of each object with a large set of points arranged in a surface mesh.
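One way to represent an object with concavities without splitting it into convex pieces is as a Boolean combination of simple primitives, in the style of constructive solid geometry. The following is a minimal sketch of that idea, not the formulation used in this work: the helper names (`sphere`, `box`, `difference`, and so on) and the example shape are assumptions chosen for illustration. Each solid is modelled as a membership test, which is the kind of feasibility check an optimizer would need for every trial point.

```python
import math

def sphere(center, radius):
    """Primitive solid: membership test for a closed ball (hypothetical helper)."""
    def inside(p):
        return math.dist(p, center) <= radius
    return inside

def box(lo, hi):
    """Primitive solid: membership test for an axis-aligned box (hypothetical helper)."""
    def inside(p):
        return all(l <= c <= h for l, c, h in zip(lo, p, hi))
    return inside

def union(a, b):
    return lambda p: a(p) or b(p)

def intersection(a, b):
    return lambda p: a(p) and b(p)

def difference(a, b):
    # Points of a that are not in b; this is what creates a concavity.
    return lambda p: a(p) and not b(p)

# A concave object: a unit cube with a spherical bite removed at one corner.
notched_cube = difference(box((0, 0, 0), (1, 1, 1)), sphere((1, 1, 1), 0.5))

print(notched_cube((0.1, 0.1, 0.1)))  # point well inside the cube
print(notched_cube((0.9, 0.9, 0.9)))  # point inside the removed bite
```

A trial point is feasible for an object when its membership test returns `True`. The concavity never has to be decomposed, but the test must be evaluated for each candidate point.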

Since the optimization problem is not unimodal (i.e., it has more than one local minimum), global optimization techniques are used. In the continuous approach, Simulated Annealing and Genetic Algorithms are used together with constraint-handling techniques such as penalty and repair strategies. To eliminate the computational expense of determining the feasibility of every trial point, the combinatorial approach replaces each object's geometry with a set of points on its surface. This reduces the minimum distance problem to an unconstrained combinatorial optimization problem whose solution is the combination of points (one on each object) that minimizes the distance between the objects.
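The combinatorial formulation can be illustrated with a small sketch. Everything here is an assumption made for illustration: the objects are two circles sampled in 2D, the neighbourhood move steps one index to an adjacent sample, and the cooling schedule is a plain geometric one. It is not the implementation evaluated in this work. An exhaustive search over all point pairs is included as a reference against which the annealing result can be compared.

```python
import math
import random

def circle_points(cx, cy, r, n):
    """Sample n points on a circle; stands in for a surface mesh (illustrative only)."""
    return [(cx + r * math.cos(2 * math.pi * k / n),
             cy + r * math.sin(2 * math.pi * k / n)) for k in range(n)]

def exhaustive_min_distance(pts_a, pts_b):
    """Reference answer: check every pair (i, j). Cost grows as len(pts_a) * len(pts_b)."""
    return min((math.dist(p, q), i, j)
               for i, p in enumerate(pts_a)
               for j, q in enumerate(pts_b))

def anneal_min_distance(pts_a, pts_b, steps=5000, t0=1.0, cooling=0.999, seed=0):
    """Simulated annealing over index pairs; no feasibility test is needed."""
    rng = random.Random(seed)
    i, j = rng.randrange(len(pts_a)), rng.randrange(len(pts_b))
    cur = math.dist(pts_a[i], pts_b[j])
    best = (cur, i, j)
    t = t0
    for _ in range(steps):
        # Neighbour move: shift one of the two indices to an adjacent sample.
        ni, nj = i, j
        if rng.random() < 0.5:
            ni = (i + rng.choice((-1, 1))) % len(pts_a)
        else:
            nj = (j + rng.choice((-1, 1))) % len(pts_b)
        cand = math.dist(pts_a[ni], pts_b[nj])
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if cand < cur or rng.random() < math.exp((cur - cand) / t):
            i, j, cur = ni, nj, cand
            if cur < best[0]:
                best = (cur, i, j)
        t *= cooling  # geometric cooling schedule (assumed)
    return best

pts_a = circle_points(0.0, 0.0, 1.0, 16)
pts_b = circle_points(3.0, 0.0, 1.0, 16)
print(exhaustive_min_distance(pts_a, pts_b))
print(anneal_min_distance(pts_a, pts_b))
```

Because every index pair is a valid candidate, no trial point is ever infeasible. The accuracy of the result is limited by how densely the surfaces are sampled.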

Additionally, Genetic Algorithms with niche formation techniques were developed so that the distance algorithm can track multiple minima simultaneously.
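Fitness sharing is one widely used niche formation technique, shown here only as an illustration; the source does not state which niching scheme was used. In this sketch, individuals are plain numbers, the sharing function is triangular, and `sigma` (the niche radius) and `alpha` are assumed parameters. Each individual's raw fitness is divided by the number of neighbours crowding its niche, so a crowded optimum becomes less attractive and the population can settle on several optima at once.

```python
def sharing(d, sigma, alpha=1.0):
    """Triangular sharing function: 1 at distance 0, falling to 0 at distance sigma."""
    return 1.0 - (d / sigma) ** alpha if d < sigma else 0.0

def shared_fitness(population, fitness, sigma, alpha=1.0):
    """Divide each raw fitness (to be maximized) by the individual's niche count."""
    shared = []
    for x in population:
        # The niche count is at least 1 because every individual shares with itself.
        niche_count = sum(sharing(abs(x - y), sigma, alpha) for y in population)
        shared.append(fitness(x) / niche_count)
    return shared

# Three individuals crowd one niche while a fourth sits alone in another.
population = [0.0, 0.0, 0.0, 5.0]
print(shared_fitness(population, lambda x: 1.0, sigma=1.0))
```

For a minimum distance problem, the raw fitness would have to be a quantity that grows as the distance shrinks, for example `1 / (1 + distance)`, which is again an assumption rather than the choice made in this work.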

In a series of numerical examples, a preliminary implementation of the proposed algorithms proved robust and, in terms of computational efficiency, equivalent to some conventional approaches.